2025 Was the Year AI Scaled Spend, Not Yet Outcomes

2025 will be remembered as the year generative AI became unavoidable and uncomfortable. Adoption by individuals raced ahead of enterprises, and capital flooded the ecosystem. Yet for many organizations, tangible returns lagged expectations.

An MIT study claiming that 95% of generative AI initiatives fail rattled markets over the summer, exposing how quickly sentiment could shift beneath the weight of AI’s massive capex spend. At the same time, foundation model companies announced close to $1 trillion in AI infrastructure commitments, creating a surge in revenue and valuations for AI datacenter infrastructure and model training companies. This paradox defined 2025: AI proved it could scale investment, but not yet outcomes.

And yet, beneath the noise, something more durable took shape. Real revenue emerged across the AI stack, with broad adoption at scale not only for AI infrastructure software (such as foundation models & training), but also for AI applications such as co-pilots & coding (horizontal AI applications) and healthcare (a vertical AI application). The signal was not hype; it was uneven but undeniable progress.

Where the Market Actually Is

Enterprise AI software spending surged from $12B in 2024 to $39B in 2025, now accounting for over 5% of the global SaaS market. Nearly half of this spend (49%) is concentrated in foundation models, training, and AI infrastructure, while 42% is directed toward Horizontal AI applications and the remaining 9% toward Vertical AI applications*.

Within Horizontal AI, Copilots and Coding assistants are the dominant use cases, driving 45% and 27% of horizontal AI spend, respectively. On the Vertical side, Healthcare and Legal lead adoption, accounting for 43% and 19% of Vertical AI spend*. Importantly, AI adoption today remains largely bottom-up, led by individuals through product-led growth (PLG): over 30% of use-case adoption is still PLG, nearly 5× higher than typical SaaS adoption rates. In contrast, enterprise-driven agentic AI adoption remains nascent, representing just 9% of horizontal AI spend.
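The percentages above can be turned into rough dollar figures. The following is a minimal sketch, assuming that every share quoted in this section is measured against the same $39B 2025 base; the function and variable names are illustrative, and the resulting dollar amounts are implied by the arithmetic rather than reported by the cited sources.

```python
# Implied 2025 spend per category, in billions of USD.
# Assumption: all quoted shares apply to the same $39B base.
TOTAL_2025_B = 39.0

LAYER_SHARE = {"infrastructure": 0.49, "horizontal": 0.42, "vertical": 0.09}
HORIZONTAL_SHARE = {"copilots": 0.45, "coding": 0.27, "agentic": 0.09}
VERTICAL_SHARE = {"healthcare": 0.43, "legal": 0.19}


def implied_spend(total_b, shares):
    """Multiply a total (in $B) by each share and round to two decimals."""
    return {name: round(total_b * share, 2) for name, share in shares.items()}


layers = implied_spend(TOTAL_2025_B, LAYER_SHARE)
horizontal = implied_spend(layers["horizontal"], HORIZONTAL_SHARE)
vertical = implied_spend(layers["vertical"], VERTICAL_SHARE)

for label, table in (("layers", layers), ("horizontal", horizontal), ("vertical", vertical)):
    print(label, table)
```

Under these assumptions, horizontal applications come to roughly $16.4B, of which copilots are about $7.4B and agentic AI about $1.5B, which shows how small the enterprise-driven agentic segment still is in absolute terms.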

2026: From Tools to Transformation

We believe 2026 will mark a decisive shift. Enterprises will move beyond incremental gains from copilots and chatbots and work on transforming workflows, functions and entire organizations by onboarding AI agents to work side by side with their people. The winners will not be companies that add AI features, but those that redesign their systems, both technical and organizational, for an AI-first operating model.

We also believe that new technologies such as world models in physical AI and gaming will gain more traction in the next 3-5 years, as AI provides the ability to create new worlds and experiences instantly.

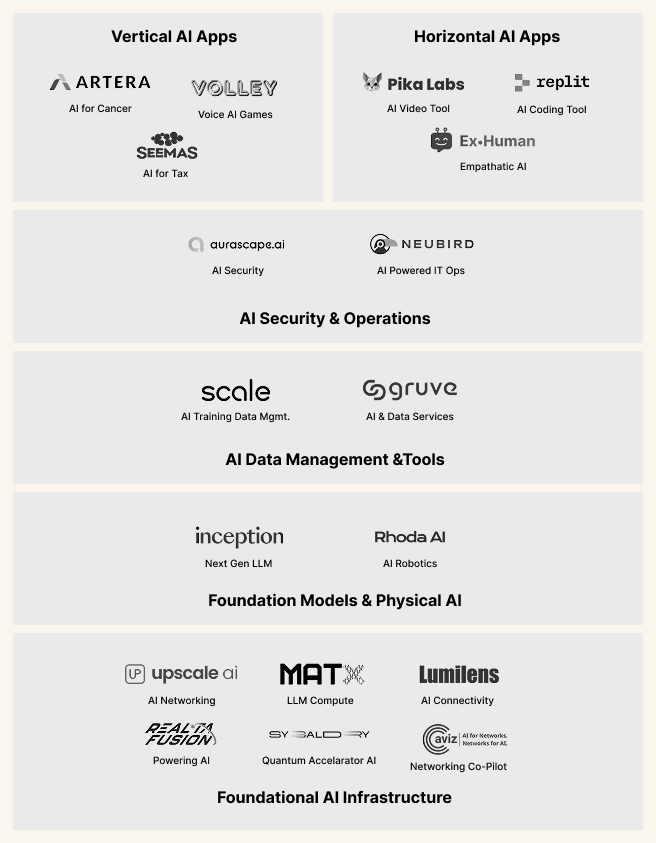

At AIspace Ventures, our investment strategy reflects these directions and we have focused on investing in three key layers:

- Foundational AI Infrastructure for AI datacenters (Hardware+Software)

- AI Infrastructure software (Foundation Models, Data Management & Tools and Security & Operations) and

- AI Applications (Horizontal + Vertical)

1. Foundational AI Infrastructure: Scaling Network Interconnects for AI

When Silicon Waits, Value Leaks. Modern AI clusters train and serve models using thousands of GPUs that must continuously exchange parameters and activations, along with ever-larger contexts. This means dense and mixture-of-experts (MoE) models will require a flexible, heterogeneous architecture that optimizes “tokens per dollar per watt”. Yet most available network interconnects use legacy or proprietary technologies and fall far short of what is needed.

The result is a quiet but costly problem: some of the most expensive silicon ever built increasingly sits idle, waiting on data rather than computing.

This interconnect bottleneck is now driving a structural split in the stack:

- The Physical Layer, constrained by physics, energy, and heat

- The Network Layer, constrained by coordination, latency, and determinism at scale

Our portfolio maps intentionally to both:

- Lumilens addresses optical connectivity by replacing copper with photonic interconnects. Moving data with light unlocks terabit-scale bandwidth, dramatically improves energy efficiency, and reduces thermal load, lowering total system cost while enabling the bandwidth density AI workloads now demand.

- Upscale AI tackles a critical network scalability challenge for GPUs/XPUs, without which AI infrastructure scaling hits a wall. Its SkyHammer scale-up silicon architecture is an AI-optimized, high-bandwidth, low-latency fabric purpose-built for AI clusters within a rack and across racks in a data center. By supporting open standards such as ESUN, UALink, and UEC, SkyHammer enables deterministic performance and multivendor interoperability, both increasingly essential for heterogeneous AI compute and for optimizing “tokens per dollar per watt”.

1. Foundational AI Infrastructure: Scaling Compute and Power Management for AI

Scalable, cost-efficient compute and energy. Advanced AI hardware is not only expensive but also power-hungry, and it generates intense heat. Optimizing “tokens per dollar per watt” therefore also requires exploring options such as fusion power and specialized AI hardware to scale training and inference exponentially and affordably.

We invested in Realta Fusion, which is developing compact, modular fusion reactors that use a magnetic mirror design to fuse atoms, with the goal of providing near-limitless, clean, cost-efficient heat and power. Specialized hardware from NVIDIA (Rubin CPX for inference prefill) and from AI accelerator startups such as Groq (for inference decode) and our portfolio company MatX (for high-volume LLM pre-training and production inference) offers superior power efficiency and speed for specific AI compute tasks at much lower cost. Another portfolio company, Sygaldry, uses a multi-modal architecture that combines complementary qubit technologies to enable quantum-accelerated AI servers, again with the aim of scaling training and inference exponentially and affordably.

2. Foundation Models and the Emergence of Physical AI

Consolidation at the Top, New Innovation at the Edges

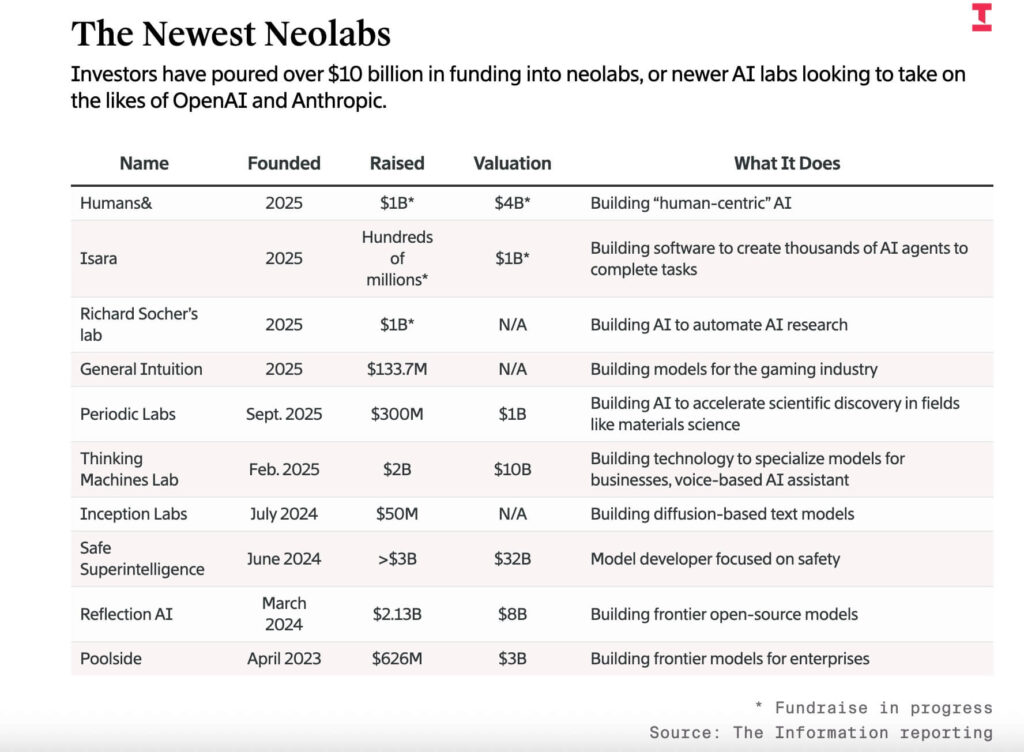

The foundation-model landscape and the overwhelming majority of model-training spend have consolidated around five incumbents: OpenAI, Anthropic, Google, Meta, and xAI. This is mainly driven by capex intensity, data gravity, and distribution advantages. In parallel, a second wave of “neolabs”, or newer AI labs, has emerged, raising $10B+ in the past two years. Notable examples include Humans& ($1B raised at a $4B valuation), Mira Murati’s Thinking Machines Lab (actively raising at a ~$50B valuation), Richard Socher’s lab ($1B), and Inception (>$50M seed raised at a ~$260M valuation).

The Information published a list of leading Neo Labs (table attached below). The list includes Inception, a startup we invested in at the pre-seed and seed rounds.

Neo Labs provide Architectural Alternatives such as:

- Diffusion-based language models: Within our portfolio, Inception Labs (whose cofounders, Stanford professor Stefano Ermon, Aditya Grover and Volodymyr Kuleshov, co-invented image diffusion) raised a >$50M seed round to develop diffusion-based language models. Diffusion is structurally different from autoregressive transformers like GPT-5 or Gemini 2.5, which generate tokens sequentially. Diffusion models instead refine an entire output representation iteratively and in parallel, which can unlock 10x faster inference at ~10x lower cost.

- Liquid Neural Networks: A compelling architectural alternative is emerging from MIT CSAIL (Daniela Rus’s lab): liquid neural networks, now commercialized by Liquid AI. Unlike transformers, which use fixed architectures, liquid networks can continuously learn and adapt in real time after deployment, making them uniquely suited for robotics, control systems, and edge environments.

These two approaches, diffusion-based language models and liquid neural networks, represent the strongest early signs of architectural divergence away from transformer dominance.

Physical AI: Applying these Models to the Real World

As foundation models evolve, AI is beginning to find traction in physical systems, with two clear areas emerging: autonomous vehicles and humanoid/industrial robotics.

- Autonomous Vehicles: Waabi raised $200M (Oct 2025) and partnered with Volvo. Its differentiated approach: instead of collecting millions of miles of real-world driving data like Waymo, it continuously generates synthetic training scenarios to train its autonomous “driver”. Other companies in this space include Wayve, which is raising $2B at a $10B valuation.

- Humanoids & Robotics: Humanoid robotics has seen a wave of public demos this year, including Tesla’s Optimus (new iteration), 1X Technologies’ NEO, Figure 02 (industrial integration) and Unitree G1 & H1 (versatile, with an open SDK).

These are promising, but still limited in real-world capabilities. Our investment in this area is Rhoda AI, which raised $230M at a ~$1B valuation to build industrial robots focused on heavy lifting, a capability most humanoid platforms cannot perform (many struggle to lift >50 lbs without losing stability). CEO Jagdeep Singh frames heavy lifting as a “first-principles unlock” for industrial automation, delivering immediate ROI.

We believe that in the next 3-5 years, physical AI systems will expand beyond transformer-based control models towards more adaptive approaches like diffusion architectures or liquid neural networks, which are significantly more efficient for edge constraints (e.g. power, latency, on-device inference).

3. Data Management, Tools, and the Rise of Agentic Interfaces

A pivotal shift in 2025 has been the rise of the Model Context Protocol (MCP), released in November 2024. You can think of it as a universal adapter that lets different AI models talk to the same tools and data sources (e.g. Google Drive, Slack, GitHub, databases, internal APIs). Both a16z and Sequoia have publicly called MCP one of the most important emerging standards in AI tooling, arguing that MCP-native companies could gain a distribution advantage as enterprises deploy mixed stacks (GPT + Claude + Gemini). The MCP ecosystem is evolving rapidly and comprises three key components that must work cohesively for large-scale enterprise adoption: 1. Applications, 2. MCP Servers, and 3. Intelligent MCP Orchestrators.

Our belief is that application vendors are best positioned to eventually dominate this space. Their deep understanding of their own systems allows them to host MCP servers within the same cloud environment as their applications, ensuring optimal performance, security, and governance. Customers already trust them to manage authorization models, which makes it easier for them to enforce access control within MCP interfaces. Significant value will also be captured by intelligent MCP orchestrators: systems capable of interpreting human intent and executing complex workflows seamlessly across diverse enterprise ecosystems, an essential enabler for all agentic applications.
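To make the “universal adapter” idea concrete, here is a minimal sketch of two different model clients reaching the same tool through one server interface. It is a toy in-process stand-in, not the official MCP SDK: the class `ToyToolServer`, the helper `call_tool`, the tool `search_docs`, and the client names are all hypothetical. The only detail borrowed from the protocol is that MCP messages follow JSON-RPC 2.0 with methods such as `tools/list` and `tools/call`; initialization, transports, authorization, and the real result schema are deliberately left out.

```python
import json
from typing import Any, Callable, Dict


class ToyToolServer:
    """Hypothetical in-process stand-in for an MCP-style server (not the real SDK)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., Any]] = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        """Expose a Python callable as a named tool."""
        self._tools[name] = fn

    def handle(self, request_json: str) -> str:
        """Dispatch one JSON-RPC-style request and return a JSON response."""
        request = json.loads(request_json)
        method = request.get("method")
        if method == "tools/list":
            result = {"tools": sorted(self._tools)}
        elif method == "tools/call":
            params = request["params"]
            fn = self._tools[params["name"]]
            # Simplified result shape; the real protocol returns structured content.
            result = {"content": fn(**params.get("arguments", {}))}
        else:
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "result": result})


def call_tool(server: ToyToolServer, client_name: str, tool: str, **arguments: Any) -> Any:
    """Any model client, regardless of vendor, builds the same request shape."""
    request = {
        "jsonrpc": "2.0",
        "id": client_name,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.loads(server.handle(json.dumps(request)))["result"]["content"]


server = ToyToolServer()
server.register("search_docs", lambda query: f"results for {query!r}")

# Two hypothetical clients backed by different models hit the same tool.
answer_a = call_tool(server, "model-a", "search_docs", query="Q3 roadmap")
answer_b = call_tool(server, "model-b", "search_docs", query="Q3 roadmap")
print(answer_a == answer_b)
```

The point of the sketch is the separation of concerns: the application vendor owns the server and its access rules, while any model or orchestrator that speaks the shared request format can use the tool without a custom integration.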

Data Evaluation and Management

Our investment in Scale AI reached a meaningful milestone with Meta’s investment deal (49% ownership at a $29B valuation). The market is now evolving further toward synthetic data and in-house labeling, with Scale alone generating $200M+ in revenue from DoD contracts. Yet there is emerging whitespace for vertical-specific data solutions since, as highlighted earlier, vertical AI adoption remains early. Multiple startups are now focused on vertical-specific data quality, simulation, and validation tooling, creating marketplaces where high-end experts in various verticals work with companies that need specialized model training. One such startup, Mercor, recently raised $350M at a ~$10B valuation. OpenAI’s recent hiring of hundreds of former investment bankers to train models for automating banking workflows also confirms the need for vertical-specific data evaluation. Data and AI service providers such as our portfolio company Gruve will also benefit from this need.

4. Security and Operations: A New Attack Surface

As AI systems move into production, they introduce new attack surfaces that traditional security platforms weren’t built to address:

- Code-based vulnerabilities as AI creates code for Enterprises

- Model poisoning (corrupting training data)

- Data leakage through model outputs

- Prompt injection (tricking models into ignoring instructions)

- Agent-based vulnerabilities as AI performs actions on behalf of users

Leaders like CrowdStrike and Palo Alto Networks excel at conventional cybersecurity but were not initially designed with these AI-specific concerns in mind. This gap is now large enough to create new AI-security categories such as AI TRiSM (Gartner). Recent M&A activity, such as Veeam acquiring Securiti AI ($1.7B, Oct 2025) for AI-native data resilience and governance and Zscaler acquiring SPLX (Nov 2025) to expand into full-stack AI security, underscores this shift.

As generative AI becomes integrated across enterprises, the ability to definitively answer “What data can this AI system access?” and “How is this data being used?” becomes non-negotiable. The winners in this category will create unified platforms that provide complete visibility into sensitive data location, context-aware classification, and intelligent protection that balances security with the practical needs of AI-driven innovation.

Our portfolio company, Aurascape, came out of stealth in April 2025 with a $50M raise to build a security platform purpose-built for AI systems and model-driven attack vectors (AI TRiSM). Its research arm, Aura Labs, identified a vulnerability in OpenAI’s ChatGPT Agent Mode in August 2025 and collaborated with OpenAI’s team to patch it, a meaningful piece of early external validation.

Another emerging area is AI-powered automation of Security Operations Centers (SOCs) to address the structural market problem of the SOC workforce crisis (approximately 4.7 million cybersecurity professionals currently employed worldwide with an estimated 3.4 million unfilled positions). Our bet in this area is NeuBird, which enables Autonomous Incident Response with Agentic SRE, using AI agents as operational copilots for security teams. NeuBird’s agents reduce incident triage and resolution time by 50%+, acting like automated “tech consultants” that handle routine investigation and remediation.

We believe that, going forward, AI security and DevOps will be driven increasingly by purpose-built AI-first architectures, offensive research and operational reality, not by legacy pattern matching, block/allow methods or compliance checklists.

5. Application Layer (Horizontal + Vertical): Where ROI Finally Compounds

As the AI infrastructure layer matures, applications are scaling quickly across various horizontal use cases and several enterprise verticals.

Co-pilots and Coding are the top two Horizontal AI breakout use cases, driving 45% and 27% of Horizontal AI spend respectively. Our bet in this area is Replit, which released an AI coding tool that reached $100M ARR within 6 months of launch and has announced that it expects to reach $1B ARR by the end of 2026. Replit was also named by Ramp as the fastest-growing software vendor.

Horizontal AI use cases for customer support drove only ~5% of Horizontal AI market spend, but are expected to gain traction in 2026. Leading startups in this area include Sierra (raised $635M at a $10B valuation), Cresta (raised $282M at a $1.6B valuation) and Decagon (raised $100M at a $1.5B valuation). All three target the same pattern: highly repetitive work, easy-to-measure outcomes (resolution time, CSAT), and clear ROI, which should make enterprise adoption easier.

Consumer AI is another horizontal growth area, though monetization here lags. Menlo reports ~63% of consumers use AI tools, but only ~3% pay. Another Horizontal AI company we invested in, Pika, now with 16.5M users, launched its standalone Predictive Video app aimed at Gen Z/Alpha creators. It sits between entertainment and creative tooling, similar to OpenAI’s Sora and Meta’s Vibes. As consumer monetization continues to materialize, this is an area to monitor closely.

Healthcare and Legal are the top two AI verticals, driving 43% and 19% of Vertical AI spend respectively. Three major recent Healthcare AI deals totaled $300M+:

- Hippocratic raised $126M (now in 50+ health systems)

- Hyro raised $45M (30M+ patient interactions processed)

- AAVantgarde raised $141M (AI for drug discovery)

Menlo estimates $1.4B in healthcare AI spend in 2025, growing 2.2x faster than the broader AI market. Healthcare has numerous workflows where ROI is easy to measure, including documentation, prior authorization, and patient engagement. Within this category, our portfolio company, Artera, received £1.9M in NHS funding in November 2025 to validate its prostate-cancer diagnostic technology across 1.6M patients. This opens the door to UK/EU expansion following its July FDA approval.

Legal AI and financial services are tracking a similar adoption pattern:

- Expensive manual labor (associates billing $500–800/hr)

- Repeatable tasks (contract review, financial modeling, reconciliation)

- Quantifiable outcomes (hours saved, error reduction)

At AIspace Ventures, we track and invest where these transitions are structural, not cosmetic, and where architecture, not hype, defines the next decade of value creation.

*Market sizing numbers used in this report are sourced from PitchBook, Menlo Ventures and McKinsey reports on 2025 AI adoption trends.